Megatron-LM源码系列(六):Distributed-Optimizer分布式优化器实现Part1

1. 使用说明

在megatron中指定--use-distributed-optimizer就能开启分布式优化器,

参数定义在megatron/arguments.py中。分布式优化器的思路是将训练中的优化器状态均匀地分布到不同数据并行的rank结点上,相当于开启ZERO-1的训练。

1 | group.add_argument('--use-distributed-optimizer', action='store_true', |

在使用--use-distributed-optimizer, 同时会check两个参数

args.DDP_impl == 'local'(默认开启)和args.use_contiguous_buffers_in_local_ddp(默认开启)。

1 | # If we use the distributed optimizer, we need to have local DDP |

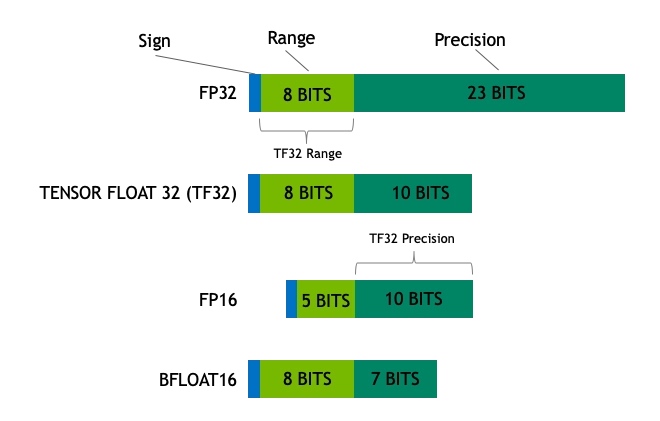

分布式优化器节省的理论显存值依赖参数类型和梯度类型,以下是每一个parameter对应占用的理论字节数(d表示数据并行的size大小,也就是一个数据并行中的卡数,

等于 \(TP \times PP\) ):

| 训练数据类型 | Non-distributed optim(单位Byte) | Distributed optim(单位Byte) |

|---|---|---|

| float16 param, float16 grads | 20 | 4 + 16/d |

| float16 param, fp32 grads | 18 | 6 + 12/d |

| fp32 param, fp32 grads | 16 | 8 + 8/d |